How to Add robots.txt in Django the Easy Way

Hi everyone! In today’s tutorial I am sharing a simple and quick way to add a robots.txt file to your Django project. Before diving further, you should already have an idea of why robots.txt is needed and what a robots.txt file looks like, though I will provide a sample robots.txt file in this tutorial as well.

Creating a dedicated robots app is an easy way to add robots.txt to a Django website.

Step 1:

Create an app named robots from the command line (run this in your project folder, where manage.py lives):

python manage.py startapp robots

Add this app to INSTALLED_APPS in settings.py:

INSTALLED_APPS = [
    # ... your existing apps ...
    'robots',
]

Step 2:

Create a file named urls.py inside the robots folder (this folder is created inside your project automatically when you create the app in Step 1).

Add the following lines to urls.py:

from django.urls import path

from . import views

urlpatterns = [
    path('', views.robots, name='robots'),
]

Step 3:

Open views.py in the robots folder and add the following code (change khalsalabs.com to your own domain):

from django.shortcuts import render, HttpResponse

# Create your views here.

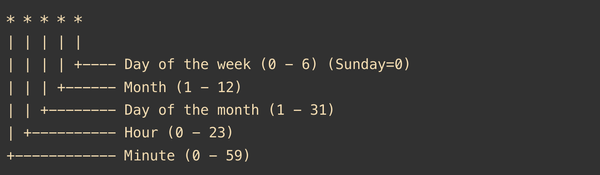

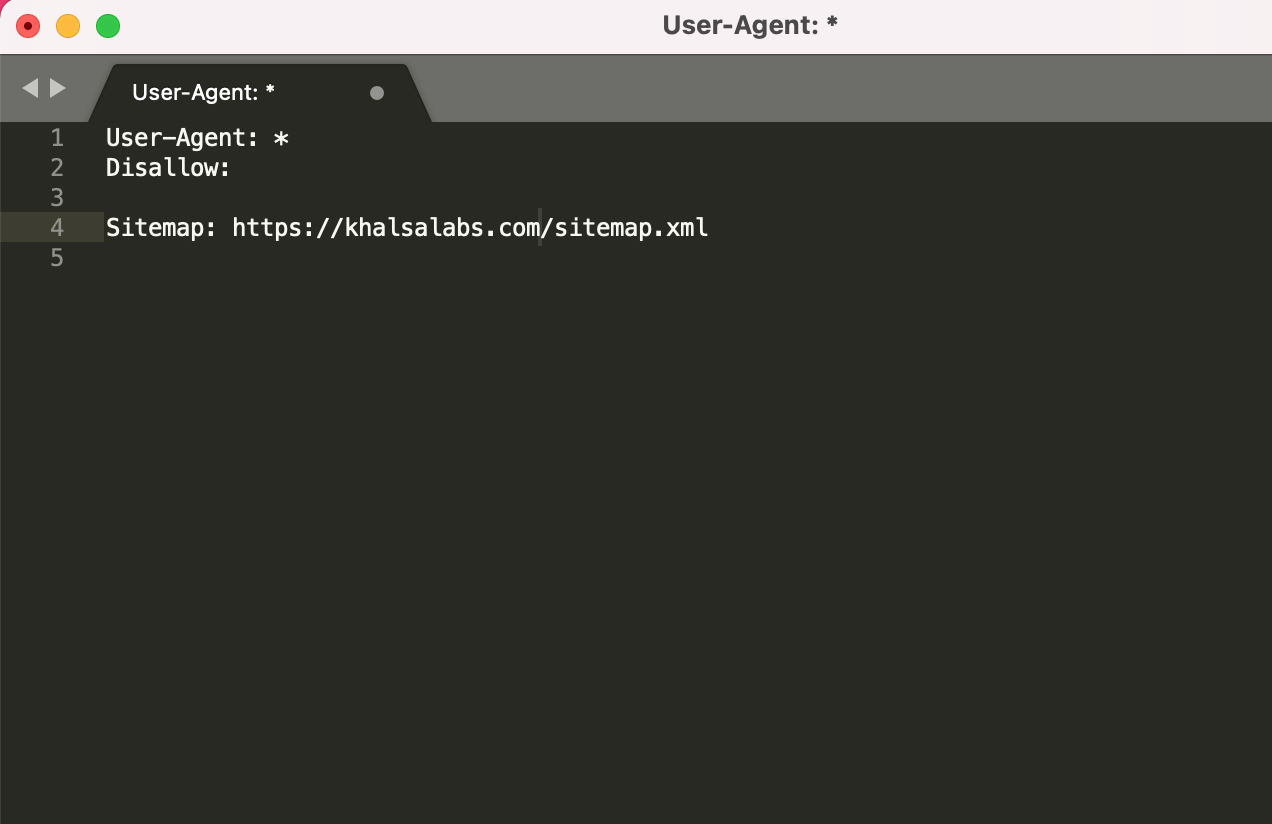

robots_file = '''User-Agent: *

Disallow:

Sitemap: https://khalsalabs.com/sitemap.xml

'''

def robots(request):

content = robots_file

return HttpResponse(content, content_type='text/plain')
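If you'd rather not edit one big string by hand, the file can also be generated from a few values. This is a minimal sketch, assuming a hypothetical helper `build_robots_txt` (it is not part of Django); it produces the same rules as the sample robots_file in Step 3 and can optionally block paths, such as the example `/admin/` prefix used below.

```python
def build_robots_txt(sitemap_url, disallow=(), user_agent="*"):
    """Hypothetical helper (not part of Django): build robots.txt text."""
    lines = [f"User-Agent: {user_agent}"]
    if disallow:
        # One Disallow line per path prefix that crawlers should skip.
        lines.extend(f"Disallow: {path}" for path in disallow)
    else:
        # An empty Disallow value means nothing is blocked.
        lines.append("Disallow:")
    lines.append(f"Sitemap: {sitemap_url}")
    # Join with newlines and end the file with a trailing newline.
    return "\n".join(lines) + "\n"


# Same rules as the sample robots_file in Step 3.
robots_file = build_robots_txt("https://khalsalabs.com/sitemap.xml")

# Example: additionally block a (hypothetical) /admin/ path.
blocked = build_robots_txt("https://khalsalabs.com/sitemap.xml", disallow=["/admin/"])
print(blocked)
```

In the view you could then pass the helper's result to `HttpResponse` in place of `robots_file`; keeping the rules as a list makes it easier to add or remove blocked paths later.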

Step 4:

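Open your project's urls.py (the URL file of the main project).

Add this single line at the end of urlpatterns, followed by a comma. If include is not already imported there, import it from django.urls as well:

from django.urls import include, path

path('robots.txt', include('robots.urls')),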

Tada! You are done.
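Besides opening the file in a browser, you can sanity-check the rules offline with Python's standard-library `urllib.robotparser`. This is a minimal sketch, assuming the same robots.txt text as the view in Step 3, with khalsalabs.com as the example domain; `parse()` takes the lines directly, so no server needs to be running.

```python
from urllib.robotparser import RobotFileParser

# The same rules served by the robots view in Step 3.
robots_file = """User-Agent: *
Disallow:
Sitemap: https://khalsalabs.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() accepts an iterable of lines, so no network request is made here.
parser.parse(robots_file.splitlines())

# An empty Disallow means every path may be crawled by every user agent.
print(parser.can_fetch("*", "https://khalsalabs.com/any/page/"))  # True

# site_maps() (Python 3.8+) returns the listed Sitemap URLs, or None if there are none.
print(parser.site_maps())  # ['https://khalsalabs.com/sitemap.xml']
```

To check the live file instead, calling `parser.set_url("http://localhost:8000/robots.txt")` followed by `parser.read()` fetches it from the running development server before you query it.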

Now check your file at http://localhost:8000/robots.txt or http://